Customizations for Data Engineers - Data Integration

User story: As a Data Engineer for the OOP Application I would like to restrict the tables in the data model so that the data load time is reduced significantly.

Business Impact / Added Value | High Technical requirement for large data-models to be able to use the app including tasks/task augmentation capabilities |

Technical Complexity | Medium - Data Integration: Creating SQL scripts to filter VBAK/VBAP/_CEL_O2C_ACTIVITY - Studio: Add information to the Knowledge Model affecting App data and global filters |

Impacted by Customizations | Low Order Status Tables are SAP ECC standard and should not be impacted by customizations |

Although the required tables are not yet included in the Data Model from the Standard Connector, we highly encourage you to transition onto this version. Besides technical advantages during loading times, enabling faster load cycles, it brings several business relevant impacts. There are other inefficiencies that can be enabled using the new tables VBUP and VBUP_DEL.

The business logic for open orders checks the order and delivery status where neither the delivery/order is fully finished, nor rejected.

Important

By default, the SAP ECC O2C Connector only extracts VBUK/VBUP for Orders. To make this statement work you need to remove the extraction join from these two (in Data Jobs or Replication Cockpit). Customers please reach to their Customer Value Manager or implementation partner.

The best way to implement this is by applying the open orders filter in the SQL transformations on the tables VBAP, VBAK, and _CEL_O2C_ACTIVITES.

Important

A common problem with long running transformations and slow data model loads is due to missing statistics in Vertica. This issue can be resolved by adding the Vertica ANALYZE_STATISTICS statement directly in the SQL. For more information, refer to Vertica Transformations Optimization.

Create a new data job including three transformations:

Create O2C_VBAP_OPS:

DROP TABLE IF EXISTS O2C_VBAP_OPS; CREATE TABLE O2C_VBAP_OPS AS ( SELECT DISTINCT VBAP.* FROM O2C_VBAP AS VBAP JOIN O2C_VBUP AS VBUP AND VBAP.MANDT = VBUP.MANDT AND VBAP.VBELN = VBUP.VBELN AND VBAP.POSNR = VBUP.POSNR LEFT JOIN "O2C_VBFA_V" AS "VBFA" AND VBAP.POSNR = VBFA.POSNV AND VBAP.VBELN = VBFA.VBELV AND VBAP.MANDT = VBFA.MANDT LEFT JOIN "O2C_LIPS" AS "LIPS" AND "VBFA"."MANDT" = "LIPS"."MANDT" AND "VBFA"."VBELN" = "LIPS"."VBELN" AND "VBFA"."POSNN" = "LIPS"."POSNR" LEFT JOIN VBUP AS VBUP_DEL AND "VBUP_DEL"."MANDT" = "LIPS"."MANDT" AND "VBUP_DEL"."VBELN" = "LIPS"."VBELN" AND "VBUP_DEL"."POSNR" = "LIPS"."POSNR" WHERE (-- 0) Make sure to use your brakets correctly (-- 1) order item is not yet completed nor rejected VBUP.GBSTA IS NOT NULL AND VBUP.GBSTA !='C' AND VBUP.ABSTA != 'C' ) OR -- 2) order item is completed, but DN item is not yet completed nor rejected but not not-relevant (i.e. not null) —- 2.1) it exists -- 2.2) open DN item —- 2.3) which is not rejected —- Use COALESCE() to exchange with '' if not existent yet, as NULL included would lead condition to not being applied ( VBUP_DEL.GBSTA IS NOT NULL AND —- 2.1) VBUP_DEL.GBSTA != 'C' AND —- 2.2) COALESCE("VBUP_DEL"."ABSTA",'') != 'C' —- 2.3) ) ) );Create _CEL_O2C_ACTIVITY_OPS:

DROP VIEW IF EXISTS O2C_ACTIVITIES_OPS; CREATE VIEW O2C_ACTIVITIES_OPS AS ( SELECT DISTINCT _CEL_O2C_ACTIVITIES.* FROM "_CEL_O2C_ACTIVITIES" JOIN O2C_VBAP_OPS AS VBAP AND _CEL_O2C_ACTIVITIES.MANDT=VBAP.MANDT AND _CEL_O2C_ACTIVITIES.VBELN=VBAP.VBELN AND _CEL_O2C_ACTIVITIES.POSNR=VBAP.POSNR );Create O2C_VBAK_OPS:

DROP VIEW IF EXISTS O2C_VBAK_OPS; CREATE VIEW O2C_VBAK_OPS AS ( SELECT DISTINCT VBAK.* FROM O2C_VBAK AS VBAK JOIN O2C_VBAP_OPS AS VBAP_OPEN AND VBAK.MANDT=VBAP_OPEN.MANDT AND VBAK.VBELN=VBAP_OPEN.VBELN );(Optional) To further decrease loading times, it is possible to build those tables for KNA1, VBFA, LIPS as well by joining them on the respective order table VBAK or VBAP.

Execute the data job & load the data model:

Run the data job to create all tables.

Duplicate your existing data model.

Add the newly created _OPS tables to the new Data Model by replacing the same tables and load it.

Adjust schedules based on new load times. It is possible to decrease the time intervals due to the significantly reduced loading time.

Adjust the VARIABLE_OPEN_ORDER_ITEM in the Knowledge Model to reflect the same logic

variables: - id: VARIABLE_OPEN_ORDER_ITEM displayName: Filter condition Open Order Items value: ( (-- 1) order item is not yet completed nor rejected PU_LAST("VBAP", "VBUP"."GBSTA") NOT IN ('C') AND PU_LAST("VBAP", "VBUP"."ABSTA") NOT IN ('C') ) OR ( -- 2.1) for each completed, but not rejected order item —- 2) still take order item if it has -—2.2) > 0 DN item that are not yet completed nor rejected PU_MAX(VBAP, —- Take MAX of Returned Items BIND(VBFA, —- Returns # DN items for 2.2 PU_COUNT(LIPS,VBUP_DEL.VBELN, VBUP_DEL.GBSTA != 'C' AND —-2.2) VBUP_DEL.ABSTA NOT IN ('C') —-2.2) ) ) , PU_LAST(VBAP, VBUP.GBSTA) IN ('C') AND —-2.1) PU_LAST(VBAP, VBUP.ABSTA) NOT IN ('C') —-2.1) ) > 0 ) )Test if the changes are successful

After you have implemented the changes, one way to test if your adjustments have been successful is to compare the number of entries within the VBAK, VBAP and Activity Table in the datamodel.

User story: As a user of the OOP Application I would like to have additional information on orders from other sources, so that I get further insights on potential inefficiencies and take appropriate action.

Business Impact / Added Value | High - Business Partner Data Medium - Sales Order Business Data - Credit Data |

Technical Complexity | Medium - Data Integration: Creating SQL scripts to add relevant information - Studio: Add information to the Knowledge Model and Views |

Impacted by Customizations | Low Relevant Tables are SAP ECC Standard and should not be impacted by customizations. |

Notice

The VBPA table stores the different partner functions for each sales order. These various involved parties are essential for appropriate order processing, as they need to be maintained to ensure that

goods are delivered to the right address (Ship-To Party)

an invoice is directed to the right party (Bill-To Party)

Of course there is also other information that can be added and will be described below.

Add the relevant data to the extractions

The SQL statement is part of the existing connector already, however disabled, make sure to check out your connector first.

Create the tables to be added to the DM

-- Query 1 DROP TABLE IF EXISTS "O2C_VBPA"; -- Query 2 CREATE TABLE "O2C_VBPA" AS ( SELECT "VBPA"."MANDT" ,"VBPA"."VBELN" ,"VBPA"."POSNR" ,"VBPA"."PARVW" ,"VBPA"."KUNNR" ,"VBPA"."PERNR" ,"VBPA"."LIFNR" ,"VBPA"."ADRNR" ,"VBPA"."ABLAD" ,"VBPA"."XCPDK" ,"VBPA"."HITYP" ,"VBPA"."KNREF" , COALESCE( "KNA1"."NAME1", "LFA1"."NAME1") AS "NAME1" FROM "VBPA" LEFT JOIN "KNA1" AND "VBPA"."MANDT"="KNA1"."MANDT" AND "VBPA"."KUNNR"="KNA1"."KUNNR" LEFT JOIN "LFA1" AND "LFA1".MANDT = "VBPA"."MANDT" AND "LFA1"."LIFNR" = "VBPA"."LIFNR" );Add the created tables to the DM & reload DM

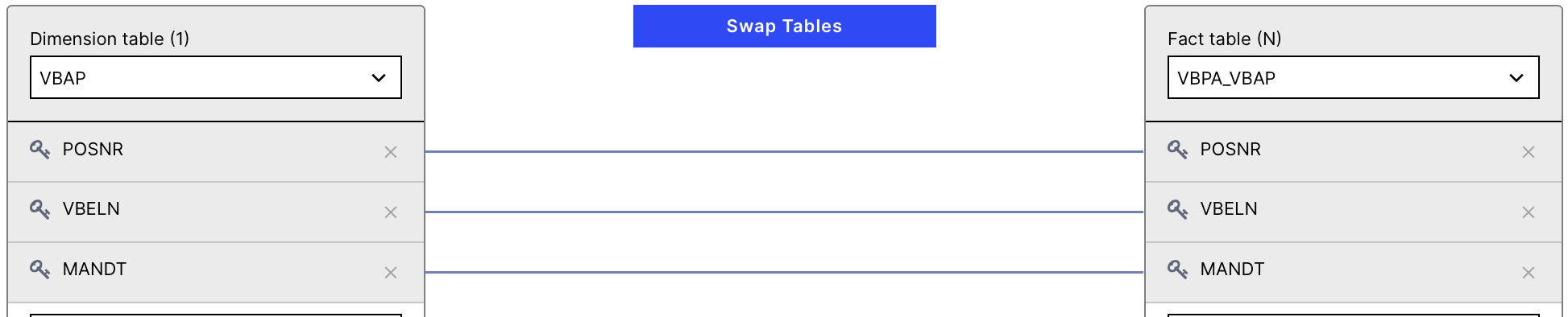

Connect the Table VBPA to the VBAP using the primary keys MANDT, VBELN, POSNR

VBPA_DM (Image)

Add the information to the KM & Views

As mentioned above, there are several use cases to be derived from information in the VBAP

Ensure that goods are delivered to the right address (Ship-to-Party) - Added to Record Order

- id: SHIP_TO displayName: Ship-to internalNote: Added as custom field pql: PU_FIRST("VBAK", "VBPA"."KUNNR", "VBPA"."PARVW" = 'WE') columnType: string - id: SHIP_TO_TEXT displayName: Ship-to internalNote: Added as custom field pql: PU_FIRST("VBAK", "VBPA"."NAME1", "VBPA"."PARVW" = 'WE')Ensure that invoices are directed to the right party (Bill-To Party) - Added to Record Order

- id: BILL_TO displayName: Bill-to internalNote: Added as custom field pql: PU_FIRST("VBAK", "VBPA"."KUNNR", "VBPA"."PARVW" = 'RE') columnType: string - id: BILL_TO_TEXT displayName: Bill-to internalNote: Added as custom field pql: PU_FIRST("VBAK", "VBPA"."NAME1", "VBPA"."PARVW" = 'RE')Have a look into the possible values for VBPA.PARVW to identify further information that might be relevant for your application. Always remember to add the

internalNote: Added as custom field. This will help during the updating of your app.

Notice

The VBKD table stores additional business data for each sales order.

Incoterms (INCO1, INCO2)

Order payment terms (VBKD.ZTERM)

Order pricing date (VBKD.PRSDT)

and many more…

Add the relevant data to the extractions

The SQL statement is part of the existing connector already, however disabled, make sure to check out your connector first.

Create the tables to be added to the DM

-- Query 1 DROP TABLE IF EXISTS "O2C_VBKD"; -- Query 2 CREATE TABLE "O2C_VBKD" AS ( SELECT V_ORDERS.MANDT AS "MANDT", V_ORDERS.VBELN AS "VBELN", V_ORDERS.POSNR AS "POSNR", COALESCE("VBKD_LI_LEVEL"."INCO1","VBKD_HEADER_LEVEL"."INCO1") AS "INCO1", COALESCE("VBKD_LI_LEVEL"."INCO2","VBKD_HEADER_LEVEL"."INCO2") AS "INCO2", COALESCE("VBKD_LI_LEVEL"."VALDT","VBKD_HEADER_LEVEL"."VALDT") AS "VALDT", COALESCE("VBKD_LI_LEVEL"."VALTG","VBKD_HEADER_LEVEL"."VALTG") AS "VALTG", COALESCE("VBKD_LI_LEVEL"."ZTERM","VBKD_HEADER_LEVEL"."ZTERM") AS "ZTERM", COALESCE("VBKD_LI_LEVEL"."PLTYP","VBKD_HEADER_LEVEL"."PLTYP") AS "PLTYP", COALESCE("VBKD_LI_LEVEL"."KONDA","VBKD_HEADER_LEVEL"."KONDA") AS "KONDA", COALESCE("VBKD_LI_LEVEL"."KDGRP","VBKD_HEADER_LEVEL"."KDGRP") AS "KDGRP", COALESCE("VBKD_LI_LEVEL"."BZIRK","VBKD_HEADER_LEVEL"."BZIRK") AS "BZIRK", COALESCE("VBKD_LI_LEVEL"."DELCO","VBKD_HEADER_LEVEL"."DELCO") AS "DELCO", COALESCE("VBKD_LI_LEVEL"."MSCHL","VBKD_HEADER_LEVEL"."MSCHL") AS "MSCHL", COALESCE("VBKD_LI_LEVEL"."MANSP","VBKD_HEADER_LEVEL"."MANSP") AS "MANSP" FROM "O2C_VBAK_VBAP" AS "V_ORDERS" LEFT JOIN VBKD AS "VBKD_LI_LEVEL" AND "VBKD_LI_LEVEL".MANDT = V_ORDERS.MANDT AND "VBKD_LI_LEVEL".VBELN = V_ORDERS.VBELN AND "VBKD_LI_LEVEL".POSNR = V_ORDERS.POSNR AND "VBKD_LI_LEVEL".POSNR <> '000000' LEFT JOIN VBKD AS "VBKD_HEADER_LEVEL" AND "VBKD_HEADER_LEVEL".MANDT = V_ORDERS.MANDT AND "VBKD_HEADER_LEVEL".VBELN = V_ORDERS.VBELN AND "VBKD_HEADER_LEVEL".POSNR = '000000' );Add the created tables to the DM & reload DM

Add the Information to the KM & Views

Add the relevant data to the extractions

KNKK

S066

S067

T691F

KNB5.BUKRS/T001S.SNAME for Collector/Dunning Clerk Name

Create the tables to be added to the DM

DROP VIEW IF EXISTS O2C_KNA1_WE; CREATE VIEW O2C_KNA1_WE AS (select DISTINCT KNA1.* from VBPA join KNA1 on VBPA.KUNNR = KNA1.KUNNR AND VBPA.MANDT = KNA1.MANDT where VBPA.PARVW = 'WE'); DROP VIEW IF EXISTS O2C_KNA1_RE; CREATE VIEW O2C_KNA1_RE AS (select DISTINCT KNA1.* from VBPA join KNA1 on VBPA.KUNNR = KNA1.KUNNR AND VBPA.MANDT = KNA1.MANDT where VBPA.PARVW = 'RE'); DROP VIEW IF EXISTS O2C_CREDIT_USAGE; CREATE VIEW O2C_CREDIT_USAGE AS ( select DISTINCT KNA1.MANDT , KNA1.KUNNR , KNA1.NAME1 , KNA1.ADRNR , KNKK.KKBER , KNKK.CTLPC , COALESCE(S067.OLIKW,0) AS "OPEN_DELIVERY_VALUE" , CASE WHEN CAST(S066.SPTAG AS DATE) <= CURRENT_DATE() + TO_NUMBER(T691F.WSWIN) * INTERVAL '1 day' THEN S066.OEIKW ELSE 0 END AS "OPEN_ORDER_VALUE" --WHEN PERIOD_TO_ANALYSE <= TODAY + HORIZONT --Then Take S066.OEIKW --RETURNs ORDER-VALUE if it is within TIME-PERIOD, else 0 --Base on Data - if multiple orders are open, they account only to a certain timeframe in the Open order Value -- PERIOD_TO_ANALYSE is taken from ORDER-DATA, HORIZONT is taken from CUSTOMER-CREDIT DATA -- Example 2 Open Orders, HORIZONT = 10 days, -- ORDER1 - Value 1000, Due in (=PERIOD_TO_ANALYZE) 10 Days, OPEN_ORDER_VALUE = 1000 -- ORDER2 - Value 5000, Due in (=PERIOD_TO_ANALYZE) 60 Days, OPEN_ORDER_VALUE = 0 , S066.SPTAG AS "PERIOD_TO_ANALYZE" , T691F.WSWIN AS "HORIZONT" FROM O2C_KNA1_RE AS KNA1 LEFT JOIN KNKK on KNA1.MANDT = KNKK.MANDT AND KNA1.KUNNR = "KNKK"."KUNNR" LEFT JOIN S067 on KNKK.KUNNR = S067.KNKLI AND KNKK.KKBER = S067.KKBER LEFT JOIN S066 on KNKK.KUNNR = S066.KNKLI AND KNKK.KKBER = S066.KKBER LEFT JOIN T691F on KNKK.CTLPC="T691F"."CTLPC" AND "KNKK"."KKBER" = "T691F"."KKBER" T691F.CRMGR = '01' );

Add the created tables to the DM & reload DM

Add the Information to the KM & Views

Add the following information as attributes to the record ORDER

- id: KKBER displayName: Credit control area pql: PU_FIRST(VBAK,BIND(VBPA,KNKK.KKBER), VBPA.PARVW='RE') filterIds: [] columnType: String - id: KLIMK displayName: Customer's credit limit pql: PU_FIRST(VBAK,BIND(VBPA,KNKK.KLIMK), VBPA.PARVW='RE') filterIds: [] format: ",.2f" - id: SKFOR displayName: Total receivables (for credit limit check) pql: PU_FIRST(VBAK,BIND(VBPA,KNKK.SKFOR), VBPA.PARVW='RE') filterIds: [] format: ",.2f" - id: OPEN_DELIVERY_VALUE displayName: Open Delivery Value pql: PU_FIRST(VBAK,BIND(VBPA,PU_FIRST("KNA1_RE",O2C_CREDIT_USAGE.OPEN_DELIVERY_VALUE)),VBPA.PARVW='RE') filterIds: [] format: ",.2f" - id: OPEN_ORDER_VALUE displayName: Open Order Value pql: PU_FIRST(VBAK,BIND(VBPA,PU_SUM("KNA1_RE","O2C_CREDIT_USAGE"."OPEN_ORDER_VALUE")),VBPA.PARVW='RE') filterIds: [] format: ",.2f" - id: CREDIT_LIMIT_OVER_UNDER displayName: Over/Under --Defines the current credit limit utilization pql: > PU_FIRST(VBAK,BIND(VBPA,KNKK.KLIMK), VBPA.PARVW='RE') --Customer’s Credit Limit - PU_FIRST(VBAK,BIND(VBPA,KNKK.SKFOR), VBPA.PARVW='RE') --Total receivables - PU_FIRST(VBAK,BIND(VBPA,PU_FIRST("KNA1_RE", O2C_CREDIT_USAGE.OPEN_DELIVERY_VALUE)),VBPA.PARVW='RE') --Open Delivery Value - PU_FIRST(VBAK,BIND(VBPA,PU_SUM("KNA1_RE", "O2C_CREDIT_USAGE"."OPEN_ORDER_VALUE")),VBPA.PARVW='RE') --Open Order Value filterIds: [] format: ",.2f"

Important

A common problem with long running transformations and slow data model loads is due to missing statistics in Vertica. This issue can be resolved by adding the Vertica ANALYZE_STATISTICS statement directly in the SQL. For more information, refer to Vertica Transformations Optimization.