Create a Data Connection that supports the Replication Cockpit

In order to use the Replication Cockpit, you first need to create a Data Connection that supports it. There are two options:

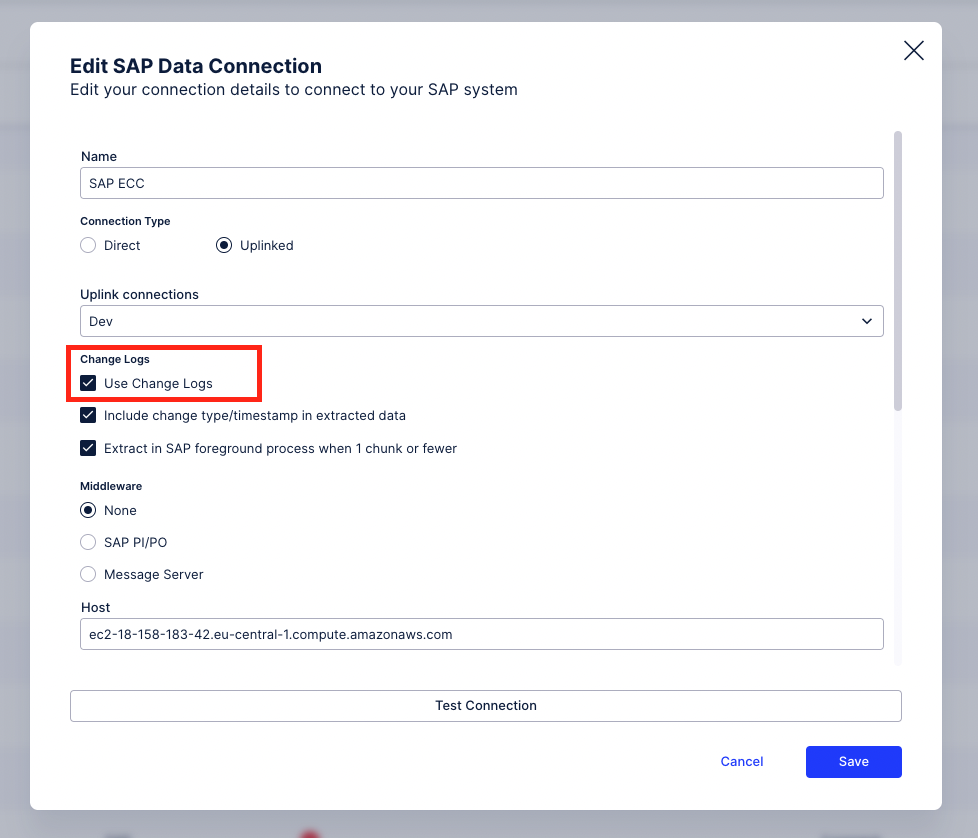

SAP: Select the "Use Change Logs" configuration in the Data Connection:

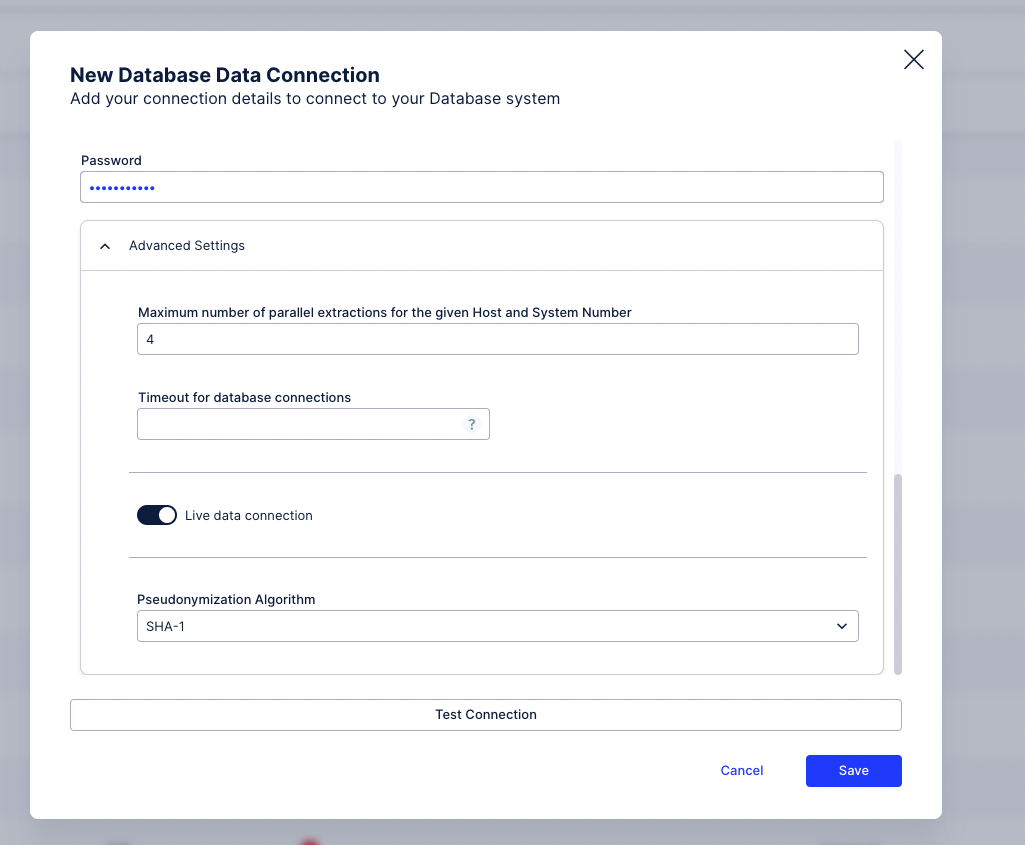

Database: Toggle the "Live Data Connection" in the Data Connection:

Add Tables to the Replication Cockpit

Contents

Adding tables to Replication Cockpit

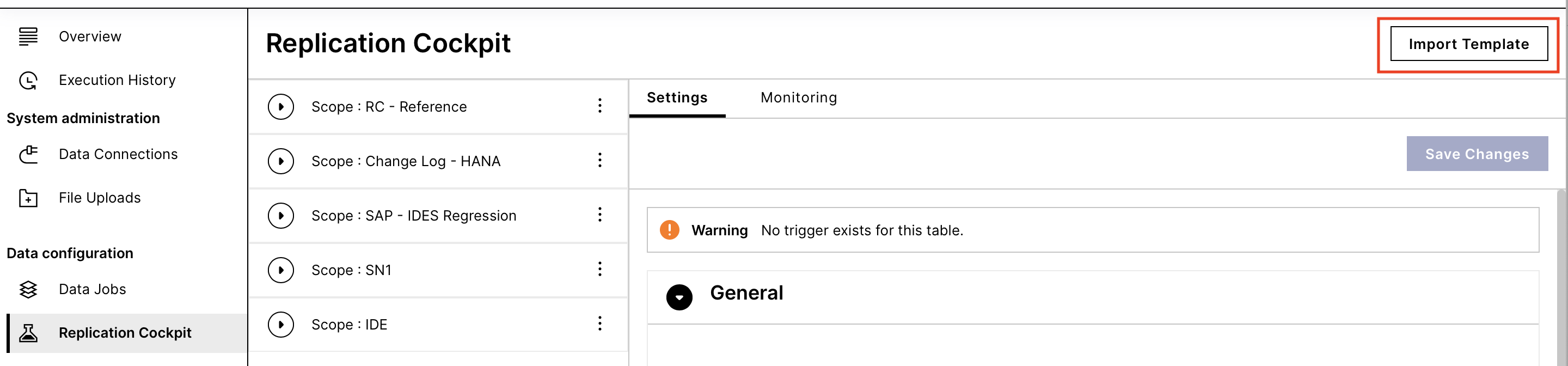

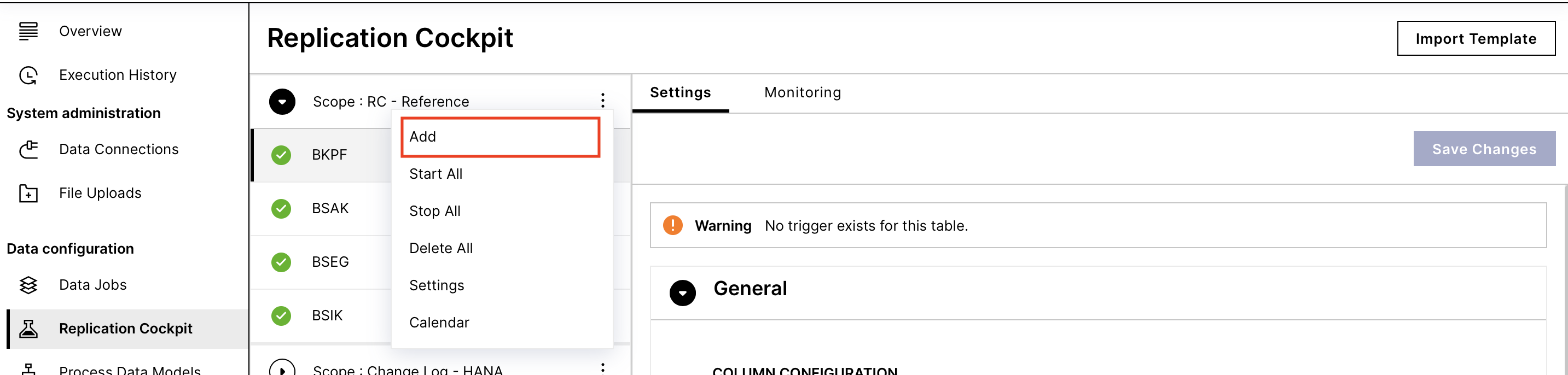

When you first navigate to the Replication Cockpit, all the SAP connections where the real-time mode (ChangeLog) is activated are displayed there. To replicate a table in the real-time mode you have to add it to the respective RC connection. This can be done via the built-in process templates, or by adding the tables one by one.

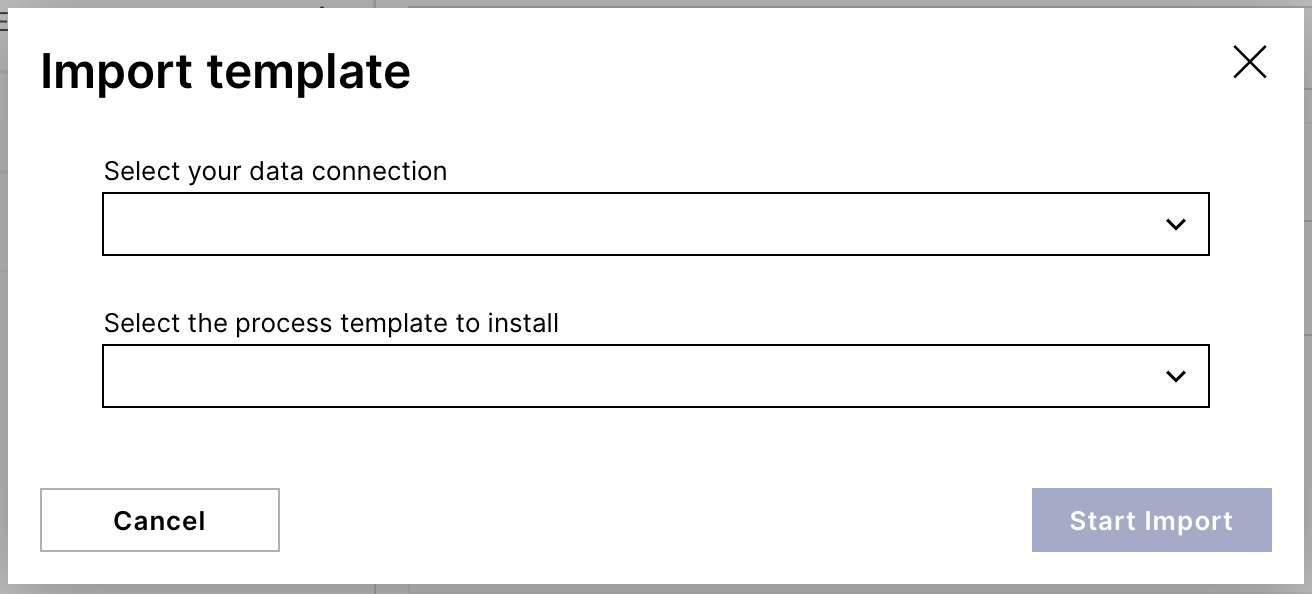

Activating a process from a template

Adding a table manually

Warning

Note that you should add ONLY the tables for which the triggers have been activated in SAP.

While CDPOS and CDHDR are exceptions and you can use them without change logs, we strongly recommend to create a change log for them too. Change Logs for these tables are supported for S/4 HANA systems, and also SAP ECC on MS SQL and Oracle databases (with the latest RFC Module).

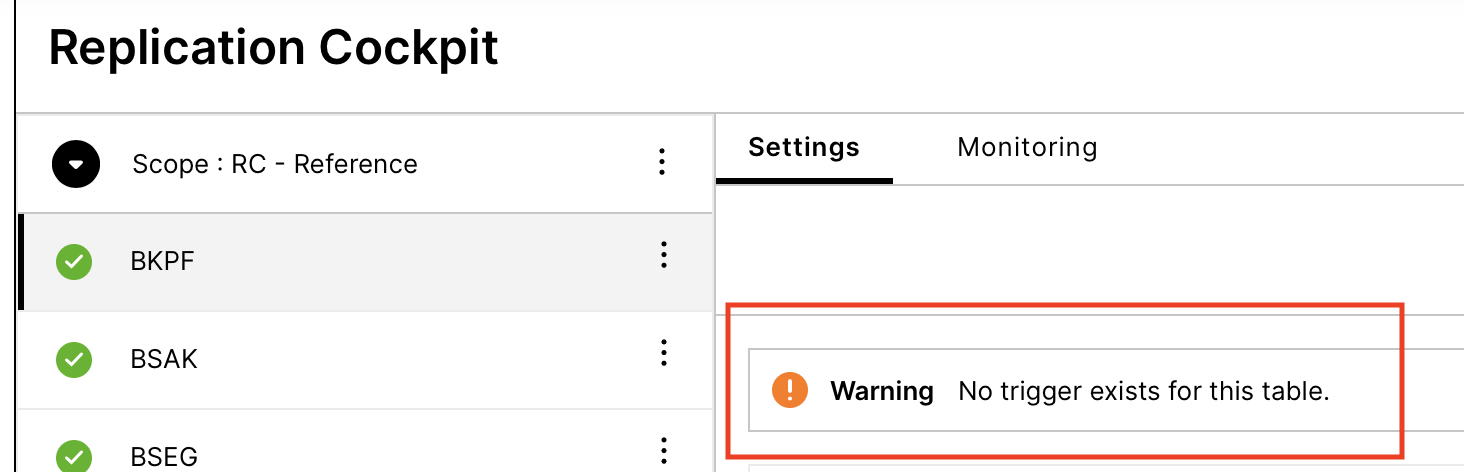

In case the added table does not have a trigger/changelog, you will see a warning that these objects do not exist in SAP.

Adding Views to the Replication

Starting from Extractor v. 3.10.0 and RFC Module 3.1.4, replications from the views in SAP are supported too. The feature has been tested against CDS and Database views that have no parameters. Theoretically any view that exists in the ABAP dictionary is supported.

Tip

Whether the View exists in the ABAP dictionary or not can be checked in the transaction code SE11. If the View is available there, then it can be replicated.

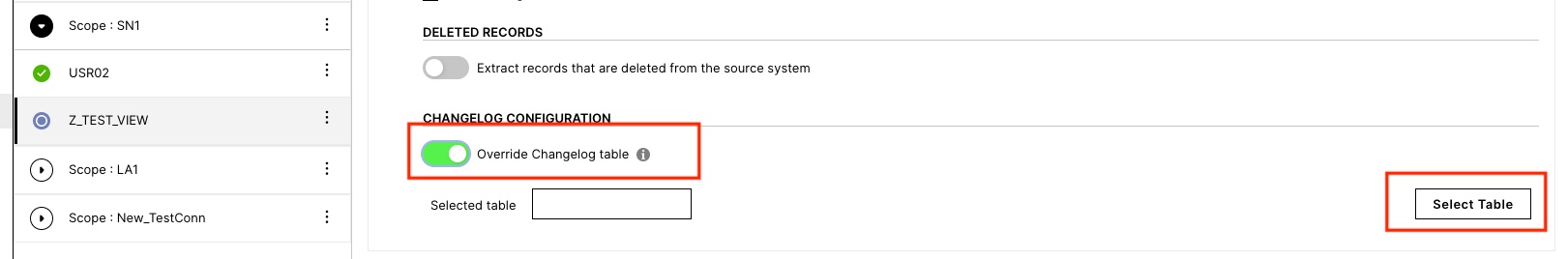

Views are different from the tables since you cannot install a trigger on it to capture the updates in the respective Change Log. Instead, the underlying table of the view is used to track the updates in a Change Log. We afterward replicate the view using that Change Log. The prerequisite is that both the view and the table have the same primary keys.

After selecting the view, you have to enable the "Override Changelog table" switch, and select the table on which the View is based. Replications will use the Changelog of that table to identify the delta in the view. The prerequisite is that the table real-time extraction setup, i.e. creating the Change Log and the Trigger, has already been completed for the table in SAP.

|

Table Configurations

After adding the table you can define the following extraction configurations:

Select/deselect and pseudonymize columns

Override the target table name in Celonis Platform

Apply a filter statement

Define an Initialization Join configuration → this join will only be applied for Initializations (Full Loads), but not to Replications. Its purpose is to be able to filter on certain date columns (start date) that are not present in the table itself but require the join on another table.

Note

In Replication Cockpit, there is no concept of delta filters anymore. The SAP real time extractor ensures that replications capture the delta automatically.

After you are done with the table setup, click start. You can do this action also in bulk from the connection level.

Define the Deletion Option

You can select between three different deletion options on table level:

|

Directly delete data from the Data Pool table

In case a deletion in an SAP table happens it will be captured in the changelog via the trigger. By selecting this option, the deleted records will directly be deleted from the table in Vertica after the Extraction. Please note, that data that is archived in SAP is also captured as a deletion and therefore deleted in Celonis. With this option, you will not be able to do transformations with the deleted data (for this you can refer to option 2).

When should I choose this option? This option allows you to exactly mirror the table of your source system in Data Integration. If you do not regularly archive data in SAP and it is fine to have archived data deleted in Celonis, then choose this option.

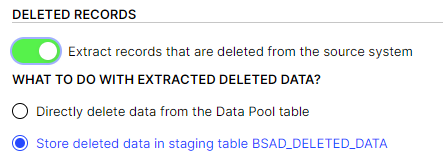

Store deleted data in staging table TABLE_DELETED_DATA

This option pushes all records that are captured as deletion to a separate staging table 'TABLE_DELETED_DATA'. The records are not directly deleted from the table in Vertica. There is no mechanism to clean the staging table automatically.

When should I choose this option? This option allows keeping track of the deletions without directly deleting all the respective records. The staging table can be used in a batch Data Job to delete all the records in the respective tables by doing a timestamp comparison.

Script example

Script to apply the deletions based on the deletion staging table (can be applied either in Replication Cockpit or in Data Jobs)

DELETE FROM BUT100 WHERE EXISTS (SELECT 1 FROM TABLE_DELETED_DATA as DEL_DATA AND TABLE.PRIMARY_KEY_1 = DEL_DATA.PRIMARY_KEY_2 --respective primary keys of the table need to be used AND TABLE.PRIMARY_KEY_2 = DEL_DATA.PRIMARY_KEY_2 AND COALESCE("TABLE"."_CELONIS_CL_TIMESTAMP", DATE(1)) < TO_TIMESTAMP(DEL_DATA."_CELONIS_CL_TIMESTAMP", 'YYYYMMDDHHMISSUS') --compares the timestamps and checks whether the deletion is actually valid );Script to clean up the deletion staging table (needs to be executed after the previous script)

TRUNCATE TABLE TABLE_DELETED_DATA;

Important

We recommend truncating the Deletion Staging Table instead of deleting it (after processing the records). Otherwise, it could lead to failing transformations.

Do nothing This option does not extract deletions at all.

When should I choose this option? When deletions are not relevant for you or don't appear on the respective table.