Using Imported Tables in the P2P ‘Local’ Jobs

Although the standard configuration of the P2P connector does not currently use this approach, you can import tables from other data pools and ‘push’ them into the local job of the P2P connector.

Example Use Case

If you already extract and use some of the P2P-specific tables (for example, EBAN) in other data pools, you do not need to re-extract them in the local job of the P2P connector. Instead, you can follow the example workflow below:

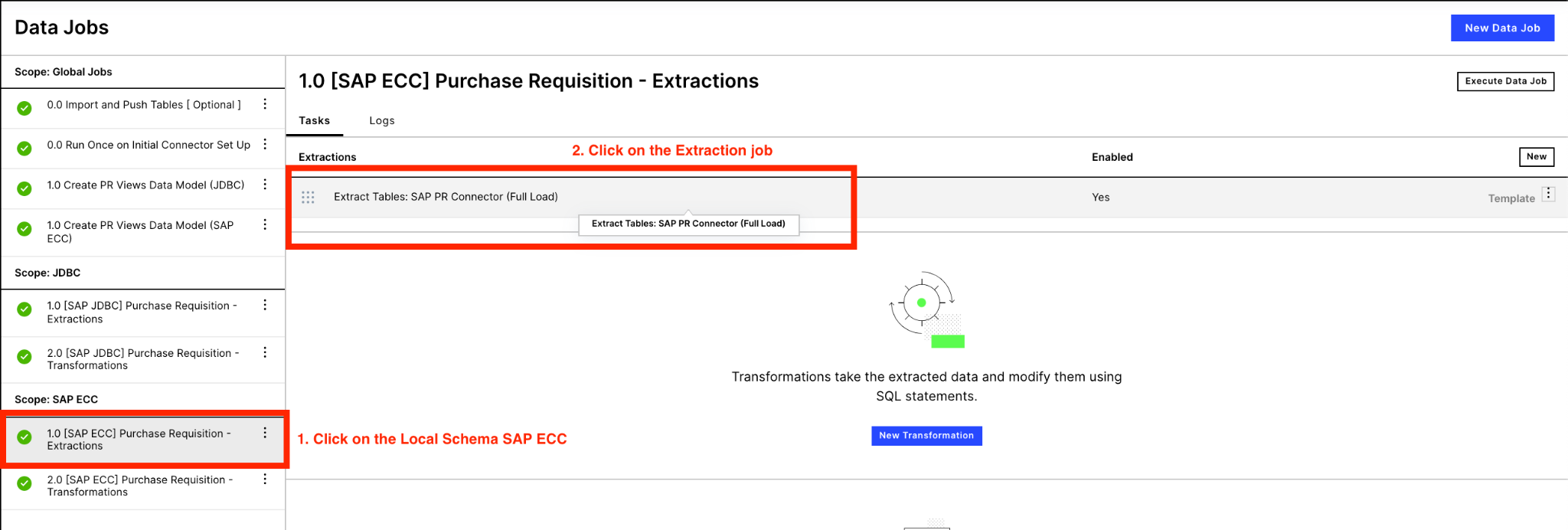

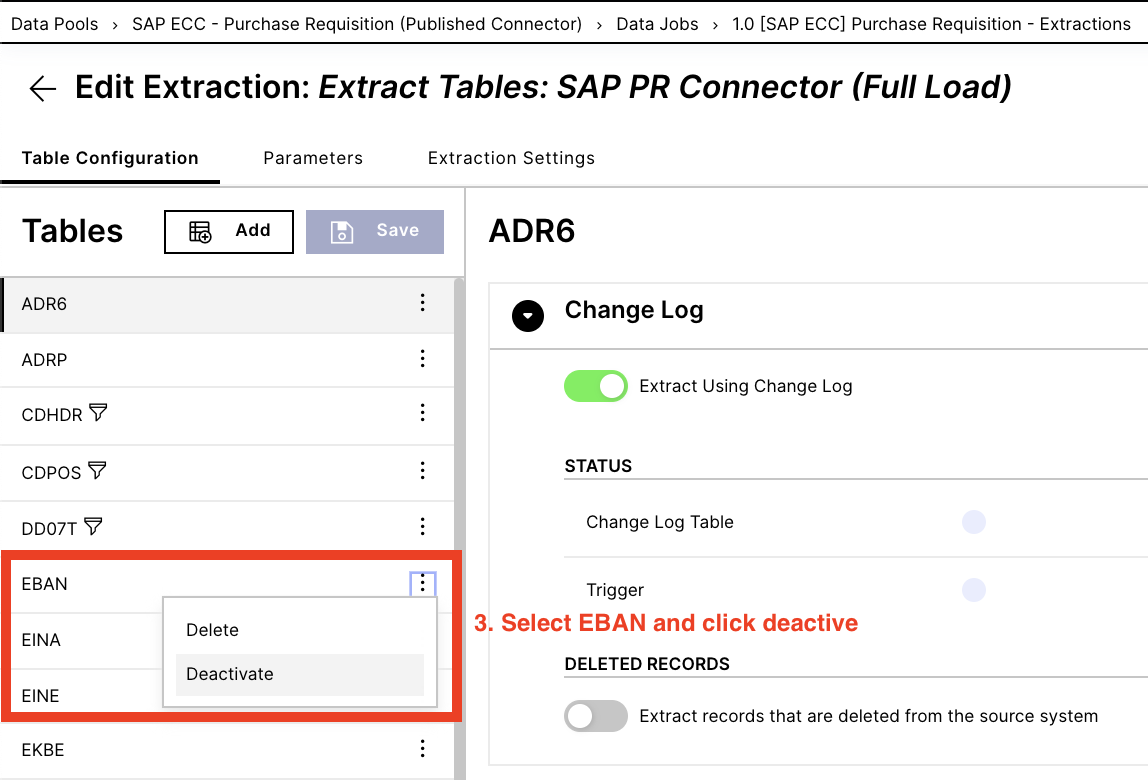

Open the relevant extraction and disable the table that you will import instead (EBAN in this example):

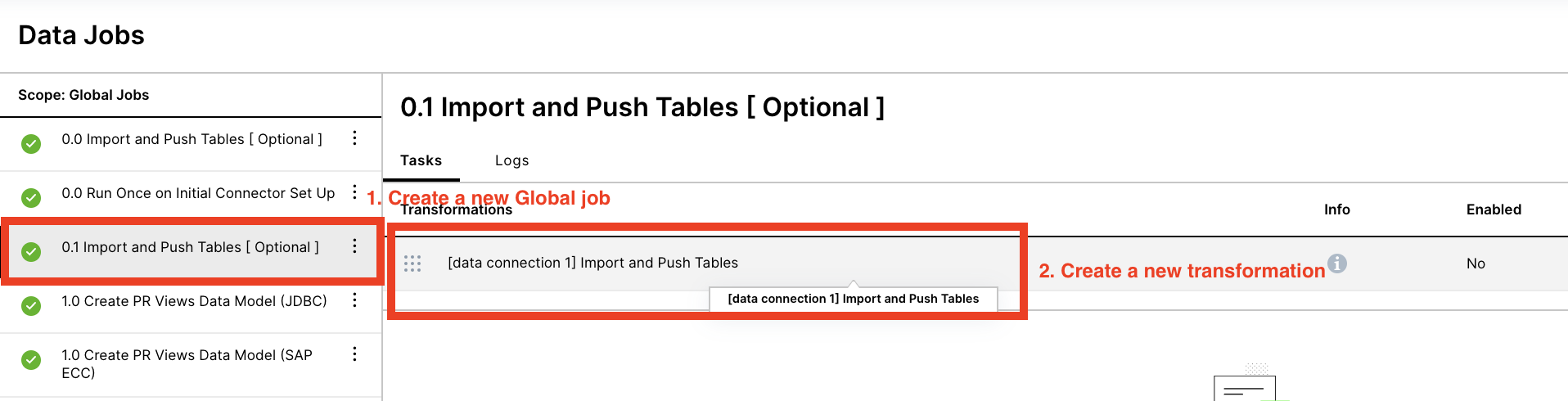

Navigate to the global job section and create a new global job named “0.1 Import and Push tables”. Within this job, create a new transformation.

The purpose of this transformation is to create a table in the local job based on the corresponding table in the source data pool. Make sure you update the data connection parameter in the FROM statement to the parameter of your own system.

```sql
-- Target: the local job and the table you want to push to and create.
-- DROP and CREATE must name the same table so the job can be re-run.
DROP TABLE IF EXISTS <%=DATASOURCE:SAP_ECC%>."EBAN";

CREATE TABLE <%=DATASOURCE:SAP_ECC%>."EBAN" AS (
    SELECT *
    FROM <%=DATASOURCE:SAP_ECC_-_PURCHASE_TO_PAY_SAP_ECC%>."EBAN"  -- source table in the source data pool
);
```

Incorporate the new global job into your data job scheduling so that these import and push transformations run before the local P2P data job. This ensures that the EBAN table (in this example) is refreshed in the local job before the local transformations that depend on it run.
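The drop-and-recreate pattern in the transformation can be illustrated outside Celonis. The sketch below is a minimal illustration only, assuming SQLite (through Python's standard `sqlite3` module) as a stand-in for the Celonis database. The `main` schema plays the role of the local P2P job, an attached schema named `source_pool` plays the role of the source data pool, and the helper `push_table` and the EBAN rows are hypothetical, made-up examples. It does not model Celonis scheduling or the `<%=DATASOURCE:...%>` parameters.

```python
import sqlite3


def push_table(conn: sqlite3.Connection, table: str) -> int:
    """Hypothetical helper: refresh the local copy of `table` from source_pool.

    Mirrors the DROP TABLE IF EXISTS + CREATE TABLE ... AS SELECT pattern
    and returns the row count of the new local copy.
    """
    # Both statements name the same target table, so every run starts clean.
    conn.execute(f'DROP TABLE IF EXISTS main."{table}"')
    conn.execute(f'CREATE TABLE main."{table}" AS SELECT * FROM source_pool."{table}"')
    return conn.execute(f'SELECT COUNT(*) FROM main."{table}"').fetchone()[0]


# Assumption: "main" stands in for the local P2P job, "source_pool" for the source data pool.
conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS source_pool")

# Made-up sample rows; BANFN/BNFPO are the requisition number and item fields.
conn.execute('CREATE TABLE source_pool."EBAN" (BANFN TEXT, BNFPO TEXT)')
conn.executemany(
    'INSERT INTO source_pool."EBAN" VALUES (?, ?)',
    [("0010000001", "00010"), ("0010000002", "00010")],
)

first_run = push_table(conn, "EBAN")

# The source pool receives new data; the next run of the push picks it up.
conn.execute('INSERT INTO source_pool."EBAN" VALUES (?, ?)', ("0010000003", "00010"))
second_run = push_table(conn, "EBAN")

print(first_run, second_run)  # 2 3
```

Because the DROP and the CREATE name the same table, the second run replaces the earlier copy with the current source contents instead of failing because the table already exists. This is also why the push has to run before any local transformation that reads the copied table.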