Creating extraction tasks using the visual editor

As the name suggests, extraction tasks allow you to select the data tables to be extracted from your source system (and imported into the Celonis Platform). When configuring your extractions, you can apply time filters, add pseudonymization, and join tables to each other.

You can either create extraction tasks manually using the visual editor from your data jobs or edit existing extraction tasks (whether originally manually created or as part of a process connector).

In addition to the visual editor, you can also create extraction tasks using the Extractions Editor and AI Assistant, see: Extractions Editor and AI Assistant overview.

Note

The extraction features and settings available are dependent on the source system you are connecting to. For an overview of supported features, see:

For a video overview of extraction tasks:

Creating and managing extraction tasks using the visual editor

To create an extraction tasks from your data pool diagram using the visual editor:

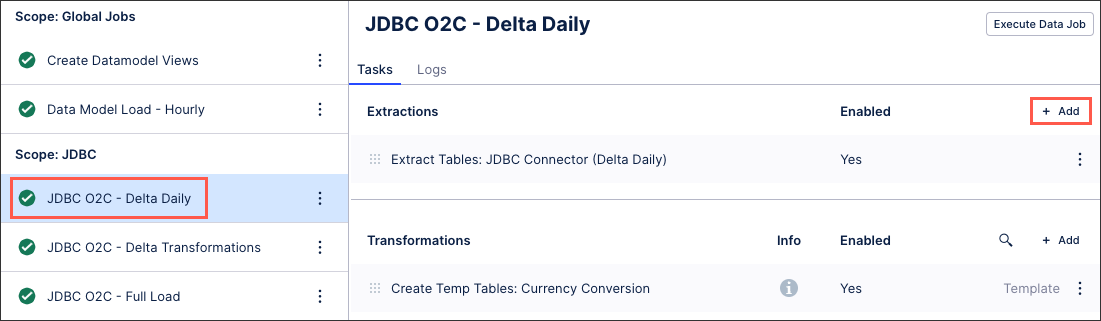

Click Data Jobs and select an existing data connection scope.

In the extraction row, click + Add.

Add an extraction task name (an internal reference only) and click Save.

The task is created and displayed.

Edit your extraction task configuration, parameters, and extraction settings as required. See: Extraction task configuration.

Options include removing

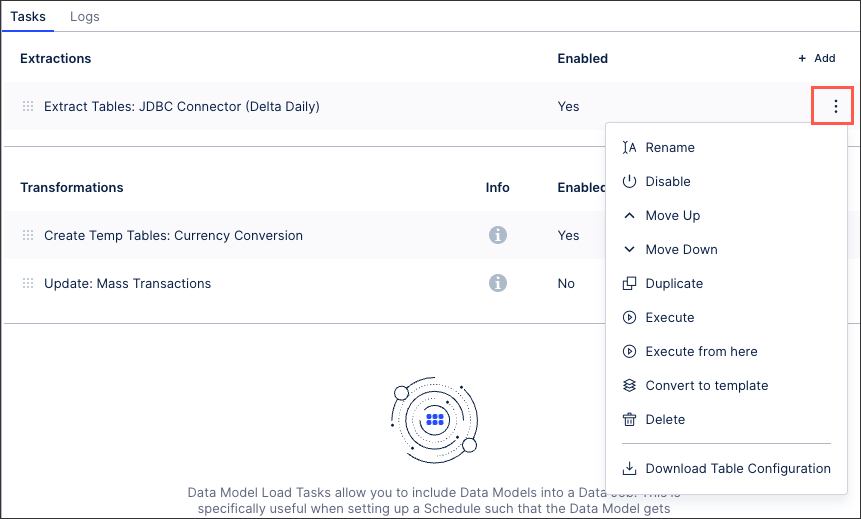

You can manage existing extraction tasks by clicking Options.

|

You have the following options here:

Rename: Update the name of the extraction task.

Enable / disable: Control whether the extraction task should be enabled or disabled for executions.

Move up / down: Change the order in which this task is performed in a full execution.

Duplicate: Create a copy of the extraction task in the existing data job.

Execute: This allows you to manually execute just this task on demand. For more information about executing data jobs, see: Executing data jobs.

Execute from here: This allows you to manually execute this and all following tasks on demand. For more information about executing data jobs, see: Executing data jobs.

Convert to template /copy to regular task: The task becomes a template and can be added to other data jobs or used to extend the template. If the task is already a template, you can create a regular task from it. For more information about task templates, see: Creating data job task templates.

Delete: This deletes the task and all associated content, with no recovery possible.

Download table configuration: This gives you offline access to a zipped file containing any relevant Excel workbook copies of your table configuration.

When creating or edit an existing extraction task, you have the following configuration options available:

Table configuration

This is where you select and configure the data tables you want to extract. Depending on your data connection type, you have the following table configuration options:

Column subset: Specify which columns should be extracted by clicking Configure next to the column count.

Pseudonymized columns: As part of the advanced settings for data connections, you can pseudonymiz the data you extract using SHA-1, SHA-256 (with and without salt), and SHA-512 (with and without salt) algorithms. If you select to pseudonymize your data, values as displayed in the source system will be replaced with hashed values.

Pseudonymization happens during the data extraction. Celonis requests source system data, then pseudonymizes that data upon receipt, converts it into parquet format, and then ingests this into the Celonis Platform.

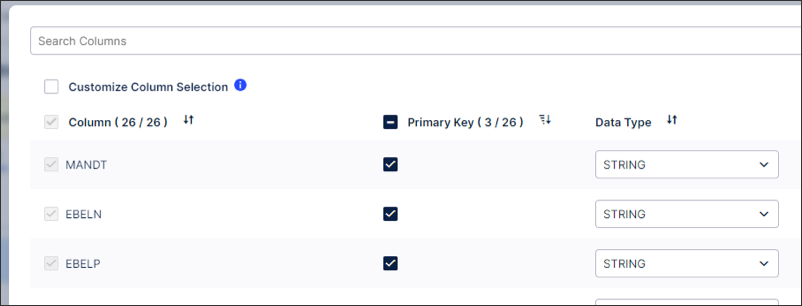

Primary key columns: You can explicitly specify which columns should be used as primary key columns during a delta load. This is only necessary if the source system does not provide this information on its own. Overriding the primary key columns does not change how data is stored in the database. It is only used for delta loads and not saved.

Note

There are several reasons duplicate records can appear on the table. One of the reasons is sanitization of records carried out by the data ingestion process.

Sanitization logic removes characters from categories: Cf, Co, Cs, Cn (Other, not assigned) and replaces line endings with \n. The sanitization is done to ensure there are no errors while uploading data to Celonis.

We have observed that these characters appear in Primary Key columns and as we remove them the records are flagged as duplicates. It is recommended that users select primary keys carefully that have true unique values. In other words, the two primary key records ideally do not differ just by one special character. We also recognise that in some cases special characters are unavoidable.

In that case, we recommend that users add another column to the primary key (eventually have a Composite Key).

We are looking for ways to get rid of this logic. This requires a deep investigation so that we do not introduce any issues. Until then please follow the recommendation to avoid running into issues.

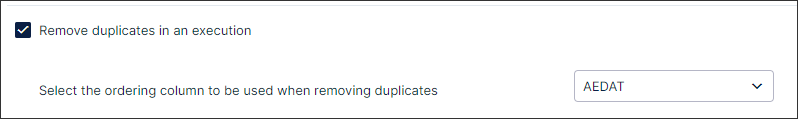

You can remove duplicate records when executing your JDBC extraction tasks by selecting the ordering column to be used:

|

This feature uses the primary key configuration to determine what records are duplicated and then removes that duplicate based on the ordering column in descending order. As a result, we recommend using a timestamped column as your ordering column. When two or more duplicates are found, the oldest record will be removed.

In this example, we're using the columns MANDT, EBELN, and EBELP.

|

You can add one or multiple join partners to the table. Each join partner can either be joined through the primary keys of the tables or through a custom join path. In order for the primary key join to work, the primary keys of the table to be joined need to be included in the primary keys of the base table. You can also add a filter for each joined table.

When creating joins in your extraction, you use child tables and parent tables:

Child tables: To be extracted table.

Parent tables: Joined table to filter the child table.

These tables are then executed in the following order:

The extraction will first apply the indicated filters on the parent table as visible on the right hand side.

The join of the parent table to the child table is conducted. This join is based on java and is mostly comparable with an inner join in SQL.

The additional filters are applied to the table resulting after the join.

You then have the following additional information:

"Use primary key" means the parent primary key.

If you join a child (n) table to a to be extracted parent (1) table on the primary key the extracted table will contain duplicates.

If there are several parent tables, the parents are filtered and then joined top down, before the resulting table is joined to the child.

Creation date filter: A filter on a data column that will be used in both full and delta loads. This filter will be combined with the "Filter Statement" under "Additional Filters" with an AND condition. So both conditions must be met.

Change date filter: A filter on a date column that automatically looks for the maximum date in the existing table and sets a filter to only extract data newer than this maximum date. This filter will be combined with the "Delta Filter Statement" under "Additional Filters" with an AND condition.

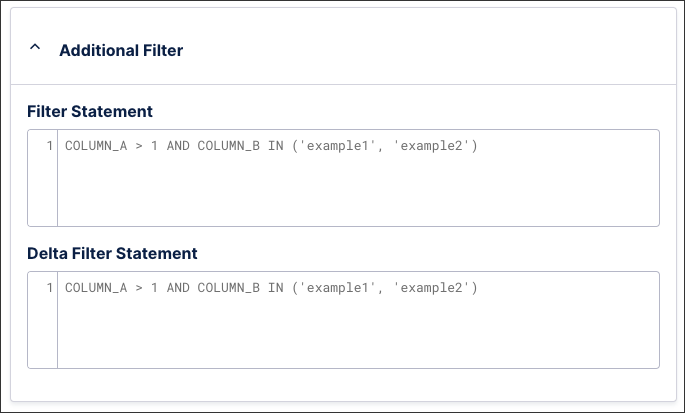

Filter statement: Using SQL syntax, specify which rows will be extracted. For example:

COLUMN_A > 1 AND COLUMN_B IN ('example1', 'example2')Delta filter: Using SQL syntax, specify which additional filters should be applied when the job executes a delta load. This filter statement is combined with the normal filter with the logical AND operator. For example:

COLUMN_A > 1 AND COLUMN_B IN ('example1', 'example2')

|

Extraction parameters

By creating and managing extraction parameters, you can control which data is extracted from your source system based on matches to your criteria.

When creating extraction parameters, you can select between private, public, and dynamic:

Dynamic extraction parameters: Delta filters are used to define which entries in the table are loaded in the delta load. Best practice is to use dynamic parameters with the operation type FIND_MAX for delta filters as they indicate the maximum value of a defined column. If the indicated table does not exist in the Celonis Platform or is empty, the DEFINED VALUE is utilized. If this VALUE does not exist, the DEFAULT VALUE is utilized.

Private (static / default): Only admins can see and edit; value is fixed.

Public (static): Admins can see/edit; but other users (when installing connectors or similar) might also have ability to see/edit in some contexts.

Table and column references

When adding your table and column references to your extraction parameter configuration, enter the name only. Adding {brackets} or “NAME“ will result in an error.

For example, the table NAME should be added as: NAME, rather than "NAME" or {NAME}.

Extraction settings - Debug mode, delta load configuration, connector parameters

The available extraction settings depend on the source system that you're connecting to.

Debug mode: Once enabled, the debug mode provides detailed log information for the data extraction job and will be displayed in the execution logs. This allows for more transparency and easier troubleshooting. This mode is active for three days and the logs created are then deleted.

Delta load configuration: Select from either:

Option A - Standard: The delta load will be cancelled, when metadata in your source system changes (i.e. new columns) compared to already extracted data in the IBC. Metadata changes will not be ignored. A full reload or manual changes of your table is necessary to clear this conflict.

Option B - Including metadata changes: The delta load will include metadata changes (i.e. new columns) and run through with a warning. Only delta loaded rows will include data for the newly added columns. Rows which are not part of the delta load will have the newly added columns nullified. This can lead to inconsistency in your data. A full load is recommended for data consistency.

Connector parameters: The following connector parameters can be configured:

Batch size: Allows specifying the batch size (in records) for one extraction request.

Max string length: Allows the modification of the default length (80 characters) of String-type columns.This is configured using the parameter: MAX_STRING_LENGTH

Binary data type handling: Table column with binary data type can be represented in two ways: UTF- 8 or HEX_NOTATION. Depending on the value specified here the binary value will be converted.

Change default metadata source: Depending on your database, you can select from:

DRIVER_METADATA: This metadata source is supported by all source systems and mostly it is the default one. Here the driver internally runs the metadata Query against the source system and fetches the result set.

SAMPLE_QUERY: This metadata source is supported by all source systems. This also works the same as driver metadata, only the query used is different.

INFORMATION_SCHEMA: This metadata source is supported mainly by Oracle system. And it's a default metadata source for Oracle 11g.

PG_CATALOG: This metadata source is supported by Amazon Redshift. And it's a default metadata source.

Limit total number: Set the maximum number of records to be extracted per job.

Extraction preview

Extraction preview is available only for JDBC connections. For the complete list of functionalities available for our commonly supported databases connections, see Databases.

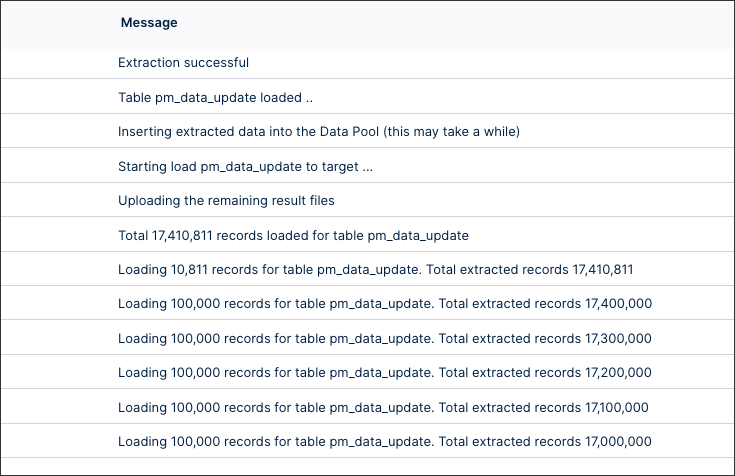

Extraction progression logs

The extraction progression logs are a visual mechanism to let the user know that the extraction is progressing well. The logs are shown for every 100k records and are independent of the batch size.

|